Recommended GPU Hosting Plans

Advanced GPU Dedicated Server - RTX 3060 Ti

- 128GB RAM

- GPU: GeForce RTX 3060 Ti

- CPU: Dual 12-Core Xeon E5-2697v2

- 240GB SSD + 2TB SSD

- Bandwidth: 100Mbps-1Gbps

- OS: Windows / Linux

- Single GPU Specifications:

- Microarchitecture: Ampere

- CUDA Cores: 4,864

- Tensor Cores: 152

- GPU Memory: 8GB GDDR6

- FP32 Performance: 16.2 TFLOPS

Basic GPU Dedicated Server - RTX 5060

- 64GB RAM

- GPU: Nvidia GeForce RTX 5060

- CPU: 24-Core Xeon Platinum 8160

- 120GB SSD + 960GB SSD

- Bandwidth: 100Mbps-1Gbps

- OS: Windows / Linux

- Single GPU Specifications:

- Microarchitecture: Blackwell 2.0

- CUDA Cores: 4,608

- Tensor Cores: 144

- GPU Memory: 8GB GDDR7

- FP32 Performance: 23.22 TFLOPS

Professional GPU VPS - A4000

- 30GB RAM

- 24 CPU Cores

- 320GB SSD

- 300Mbps Unmetered Bandwidth

- Backup: once every 2 weeks

- OS: Linux / Windows 10

- Dedicated GPU: Nvidia RTX A4000

- CUDA Cores: 6,144

- Tensor Cores: 192

- GPU Memory: 16GB GDDR6

- FP32 Performance: 19.2 TFLOPS

Advanced GPU Dedicated Server - A5000

- 128GB RAM

- GPU: Nvidia RTX A5000

- CPU: Dual 12-Core Xeon E5-2697v2

- 240GB SSD + 2TB SSD

- Bandwidth: 100Mbps-1Gbps

- OS: Windows / Linux

- Single GPU Specifications:

- Microarchitecture: Ampere

- CUDA Cores: 8,192

- Tensor Cores: 256

- GPU Memory: 24GB GDDR6

- FP32 Performance: 27.8 TFLOPS

Enterprise GPU Dedicated Server - RTX 4090

- 256GB RAM

- GPU: GeForce RTX 4090

- CPU: Dual 18-Core Xeon E5-2697v4

- 240GB SSD + 2TB NVMe + 8TB SATA

- Bandwidth: 100Mbps-1Gbps

- OS: Windows / Linux

- Single GPU Specifications:

- Microarchitecture: Ada Lovelace

- CUDA Cores: 16,384

- Tensor Cores: 512

- GPU Memory: 24GB GDDR6X

- FP32 Performance: 82.6 TFLOPS

Advanced GPU VPS - RTX 5090

- 90GB RAM

- 32 CPU Cores

- 400GB SSD

- 500Mbps Unmetered Bandwidth

- Backup: once every 2 weeks

- OS: Linux / Windows 10 / Windows 11

- Dedicated GPU: GeForce RTX 5090

- CUDA Cores: 21,760

- Tensor Cores: 680

- GPU Memory: 32GB GDDR7

- FP32 Performance: 109.7 TFLOPS

Enterprise GPU Dedicated Server - RTX 5090

- 256GB RAM

- GPU: GeForce RTX 5090

- CPU: Dual 18-Core Xeon E5-2697v4

- 240GB SSD + 2TB NVMe + 8TB SATA

- Bandwidth: 100Mbps-1Gbps

- OS: Windows / Linux

- Single GPU Specifications:

- Microarchitecture: Blackwell 2.0

- CUDA Cores: 21,760

- Tensor Cores: 680

- GPU Memory: 32GB GDDR7

- FP32 Performance: 109.7 TFLOPS
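The FP32 figures in the plans above are theoretical peaks. For most NVIDIA GPUs they follow the rule CUDA cores × 2 FLOPs per clock (one fused multiply-add) × boost clock. The sketch below checks that rule for four of the listed cards. The boost clocks are not part of the plan listings; they are assumed from NVIDIA's published reference specifications.

```python
# Theoretical peak FP32 = CUDA cores x 2 FLOPs per clock (one FMA) x boost clock.
# ASSUMPTION: boost clocks are not in the plan listings; the values below are
# NVIDIA reference boost clocks and may differ from the hosted cards.


def peak_fp32_tflops(cuda_cores: int, boost_clock_mhz: float) -> float:
    """Return the theoretical peak FP32 throughput in TFLOPS."""
    flops_per_second = cuda_cores * 2 * boost_clock_mhz * 1e6
    return flops_per_second / 1e12


# name: (CUDA cores from the plans above, assumed reference boost clock in MHz)
GPUS = {
    "RTX 3060 Ti": (4864, 1665),
    "RTX A4000": (6144, 1560),
    "RTX A5000": (8192, 1695),
    "RTX 4090": (16384, 2520),
}

if __name__ == "__main__":
    for name, (cores, clock_mhz) in GPUS.items():
        print(f"{name}: {peak_fp32_tflops(cores, clock_mhz):.1f} TFLOPS")
```

Each printed value rounds to the FP32 figure listed for that card. Sustained OCR throughput usually sits well below these peaks, because detection and recognition also depend on memory bandwidth, batch size and CPU-side pre- and post-processing.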

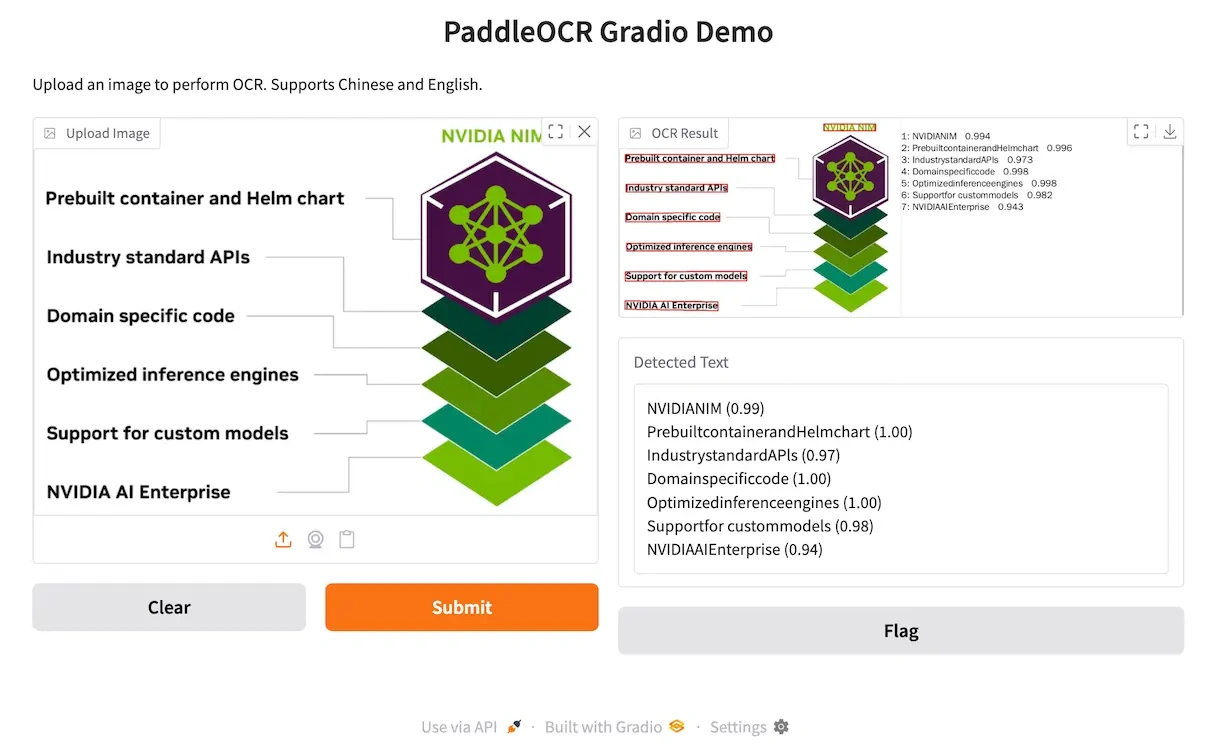

Key Features of Hosted PaddleOCR

End-to-end pipeline support

Multilingual & multi-script support

Document parsing & structure extraction

Flexible deployment & scaling

Typical Use Cases of PaddleOCR

Invoice, Receipt & Contract Automation

Extract text, tables and key amounts from scanned invoices, receipts or contracts to automate back-office workflows, reduce manual data entry and speed up processing.
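As a minimal sketch of the key-amount step, the function below scans recognized lines for a total-style keyword and parses the amount on that line. It assumes a hypothetical simplified OCR output of `(text, confidence)` pairs, one per text line; adapt it to whatever structure your PaddleOCR version actually returns. The keyword list and regular expression are illustrative, not exhaustive.

```python
import re
from decimal import Decimal
from typing import List, Optional, Tuple

# Hypothetical simplified OCR output: one (text, confidence) pair per recognized line.
OcrLine = Tuple[str, float]

# Illustrative keywords that label the amount due; extend for your own documents.
TOTAL_KEYWORDS = ("total", "amount due", "balance due")
# Two-decimal amounts, with or without thousands separators: "1,234.56" or "99.00".
AMOUNT_RE = re.compile(r"\d{1,3}(?:,\d{3})+\.\d{2}|\d+\.\d{2}")


def extract_total(lines: List[OcrLine], min_conf: float = 0.8) -> Optional[Decimal]:
    """Return the amount on the last confident line that contains a total keyword."""
    total = None
    for text, conf in lines:
        if conf < min_conf:
            continue  # skip low-confidence recognitions
        if any(keyword in text.lower() for keyword in TOTAL_KEYWORDS):
            amounts = AMOUNT_RE.findall(text)
            if amounts:
                # Keep the rightmost amount on the line, dropping thousands separators.
                total = Decimal(amounts[-1].replace(",", ""))
    return total
```

Because "subtotal" also contains "total", the last matching line wins. On most invoices the grand total is printed after the subtotal, but a layout-aware rule is more robust for unusual templates.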

Multilingual Document Digitisation

Digitise paper archives, international forms and multilingual content into searchable text and structured data, with support for multiple languages and scripts in global deployments.
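PaddleOCR selects its recognition model per language through the `lang` argument, so a multilingual service has to decide when to load each model. One option, sketched below, is to build each language's engine lazily on first use and reuse it afterwards. `EnginePool` and its `factory` hook are hypothetical names; in practice the factory might wrap something like `PaddleOCR(lang=code)`.

```python
from typing import Callable, Dict, Generic, List, TypeVar

E = TypeVar("E")


class EnginePool(Generic[E]):
    """Lazily build and cache one OCR engine per language code (hypothetical helper)."""

    def __init__(self, factory: Callable[[str], E]) -> None:
        # factory: any callable that builds an engine for a language code.
        self._factory = factory
        self._engines: Dict[str, E] = {}

    def get(self, lang: str) -> E:
        # Build the engine on first use, then reuse it for every later document.
        if lang not in self._engines:
            self._engines[lang] = self._factory(lang)
        return self._engines[lang]

    def loaded(self) -> List[str]:
        """Return the language codes whose engines are currently loaded."""
        return sorted(self._engines)
```

This cache is not thread-safe; guard `get` with a lock if requests are served concurrently. Also keep an eye on GPU memory, since every loaded language model occupies some.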

Content Pipeline for AI / LLM Workflows

Use OCR to pre-process images or PDFs into structured text that feeds large language models, knowledge graphs or downstream AI applications.
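Before OCR output can feed an LLM, the detected boxes need a sensible reading order and the text needs splitting into prompt-sized pieces. The sketch below assumes a hypothetical `Detection` record (an axis-aligned box plus its text) and a single-column page; multi-column layouts need layout analysis first. `line_tol` and `max_chars` are illustrative defaults, not tuned values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """Hypothetical simplified OCR result: axis-aligned box plus recognized text."""
    x: float       # left edge in pixels
    y: float       # top edge in pixels
    height: float  # box height in pixels
    text: str


def reading_order(dets: List[Detection], line_tol: float = 0.5) -> List[str]:
    """Group boxes into lines top-to-bottom, then join each line left-to-right.

    A box joins the current line if its top edge is within
    line_tol * (height of the line's first box). Assumes a single column.
    """
    lines: List[List[Detection]] = []
    for d in sorted(dets, key=lambda d: d.y):
        if lines and abs(d.y - lines[-1][0].y) < line_tol * lines[-1][0].height:
            lines[-1].append(d)
        else:
            lines.append([d])
    return [" ".join(d.text for d in sorted(line, key=lambda d: d.x)) for line in lines]


def chunk(lines: List[str], max_chars: int = 1000) -> List[str]:
    """Pack whole lines into chunks of at most max_chars; an over-long line stays intact."""
    chunks: List[str] = []
    current = ""
    for line in lines:
        candidate = f"{current}\n{line}" if current else line
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = line
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Chunking on whole lines keeps words and short phrases intact, which tends to matter more for retrieval and prompting than filling every chunk to the exact limit.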

Real-time Scene Text Extraction

Extract text from camera input, signage or UI screens in real time or near real time. Well suited to embedded or edge GPU setups and to environments where the text changes frequently.
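Per-frame OCR on live video tends to flicker: a sign may be read correctly in one frame and misread in the next. One simple mitigation, sketched below as a general technique rather than a PaddleOCR feature, is to report a string only after it has been recognized in several consecutive frames. `StableTextFilter` and `min_frames` are hypothetical names.

```python
from collections import Counter
from typing import Iterable, List, Set


class StableTextFilter:
    """Report a string once it has been recognized in min_frames consecutive frames."""

    def __init__(self, min_frames: int = 3) -> None:
        self.min_frames = min_frames
        self._streaks: Counter = Counter()  # text -> consecutive frames seen
        self._emitted: Set[str] = set()     # texts already reported in the current streak

    def update(self, frame_texts: Iterable[str]) -> List[str]:
        """Feed one frame's recognized strings; return strings that just became stable."""
        seen = set(frame_texts)
        # A string missing from this frame loses its streak and may be reported again later.
        for text in list(self._streaks):
            if text not in seen:
                del self._streaks[text]
                self._emitted.discard(text)
        newly_stable = []
        for text in sorted(seen):
            self._streaks[text] += 1
            if self._streaks[text] >= self.min_frames and text not in self._emitted:
                self._emitted.add(text)
                newly_stable.append(text)
        return newly_stable
```

Raising `min_frames` suppresses more one-off misreads but delays reporting by `min_frames - 1` frames.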

Table / Formula Extraction for Research

Parse complex documents containing tables, charts or formulas and convert them into structured data for research, analysis or academic workflows.
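Once a table's structure has been recovered, the remaining step is serializing the cells. This sketch assumes a hypothetical list of `(row, col, text)` cells, however they were produced, and writes them as CSV with Python's standard `csv` module, which quotes cells that contain commas. Merged (spanning) cells are not modeled.

```python
import csv
import io
from typing import List, Tuple

# Hypothetical cell format: (row index, column index, recognized text).
Cell = Tuple[int, int, str]


def cells_to_csv(cells: List[Cell]) -> str:
    """Place cells on a dense grid (missing cells become empty) and return CSV text."""
    if not cells:
        return ""
    n_rows = max(r for r, _, _ in cells) + 1
    n_cols = max(c for _, c, _ in cells) + 1
    grid = [["" for _ in range(n_cols)] for _ in range(n_rows)]
    for r, c, text in cells:
        grid[r][c] = text.strip()
    buf = io.StringIO()
    csv.writer(buf, lineterminator="\n").writerows(grid)
    return buf.getvalue()
```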

PaddleOCR Hosting FAQs

What is PaddleOCR?

Can I self-host later or bring my own model?

What kind of latency and throughput can I expect?

Is commercial usage allowed?

What do I get in the free pre-installation service?

What languages are supported?

What infrastructure do I need?

Can you help with fine-tuning or custom model deployment?
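Latency and throughput depend on the model, image resolution, batch size and GPU, so the most reliable answer comes from measuring your own documents. The harness below is a minimal sketch: `run` stands in for whatever inference call you use, and the warm-up count is an assumption. It measures sequential, single-request latency, not batched or concurrent throughput.

```python
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark(run: Callable[[object], object], inputs: Sequence[object],
              warmup: int = 3) -> Dict[str, float]:
    """Time run() on each input sequentially; report p50/p95 latency and throughput.

    Warm-up calls are excluded because early GPU calls can include one-off
    costs such as CUDA context setup and memory allocation.
    """
    if len(inputs) < 2:
        raise ValueError("need at least two inputs to compute percentiles")
    for x in inputs[:warmup]:
        run(x)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        run(x)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    cut_points = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "p50_ms": statistics.median(latencies) * 1e3,
        "p95_ms": cut_points[94] * 1e3,
        "throughput_per_s": len(inputs) / elapsed,
    }
```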

Ready to Scale Your OCR Infrastructure?

Secure your GPU-powered PaddleOCR hosting now: guaranteed low latency, full multilingual support, and enterprise scalability.

Get Started Today